Driller: RubyGem

Glad to announce ‘Driller’, a Ruby-based web crawler that crawls a website looking for error pages and slow pages.

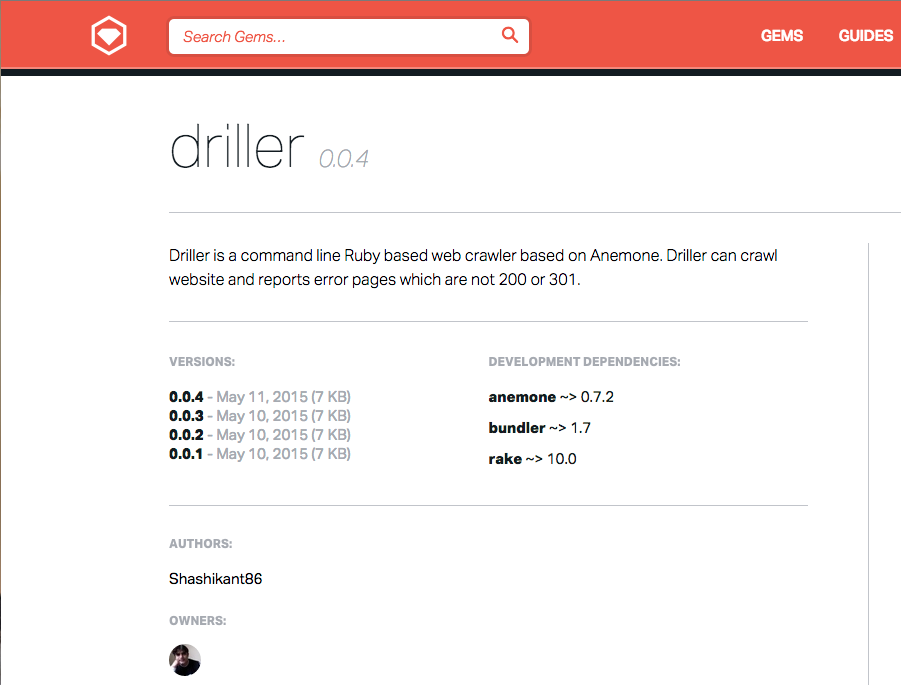

Driller is a command-line, Ruby-based web crawler built on Anemone. Driller can:

- Crawl a website and report error pages, that is, pages whose HTTP status is not 200 or 301. All other HTTP codes are reported.

- Report slow pages, meaning pages with a response time greater than 5000.

- Create three HTML files: valid_urls.html lists URLs that returned 200, broken.html lists URLs that did not return 200, and slow_pages.html lists URLs with a response time greater than 5000.
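The reporting rules above can be sketched in plain Ruby. This is only an illustration, not Driller's actual source: the `classify` method, the `SLOW_THRESHOLD` constant, and the sample pages are hypothetical names and data, the 5000 threshold is assumed to be in milliseconds, and 301 is treated as neither valid nor broken, following the first bullet.

```ruby
# Hypothetical sketch of the reporting rules; not taken from Driller's source.
# Threshold from the post; the unit is assumed to be milliseconds.
SLOW_THRESHOLD = 5000

# Returns the list of report buckets a page would fall into.
def classify(code, response_time)
  buckets = []
  buckets << :valid if code == 200
  # Assumption: 301 redirects are not reported as errors.
  buckets << :broken unless [200, 301].include?(code)
  buckets << :slow if response_time > SLOW_THRESHOLD
  buckets
end

# Made-up sample data standing in for crawled pages.
pages = [
  { url: "http://www.example.com/",        code: 200, response_time: 120 },
  { url: "http://www.example.com/old",     code: 301, response_time: 90 },
  { url: "http://www.example.com/missing", code: 404, response_time: 75 },
  { url: "http://www.example.com/heavy",   code: 200, response_time: 7200 }
]

report = Hash.new { |hash, key| hash[key] = [] }
pages.each do |page|
  classify(page[:code], page[:response_time]).each do |bucket|
    report[bucket] << page[:url]
  end
end

report.each { |bucket, urls| puts "#{bucket}: #{urls.join(', ')}" }
```

A page can land in more than one bucket here, for example a 200 page that is also slow.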

You can download ‘Driller’ from the RubyGems website. The initial version has just been published.

driller (0.0.4): Driller is a command line Ruby based web crawler based on Anemone. Driller can crawl website and… http://t.co/ApsRXeMPJ8

— RubyGems (@rubygems) May 11, 2015

Usage

Add this line to your application’s Gemfile:

gem 'driller'

And then execute:

$ bundle install

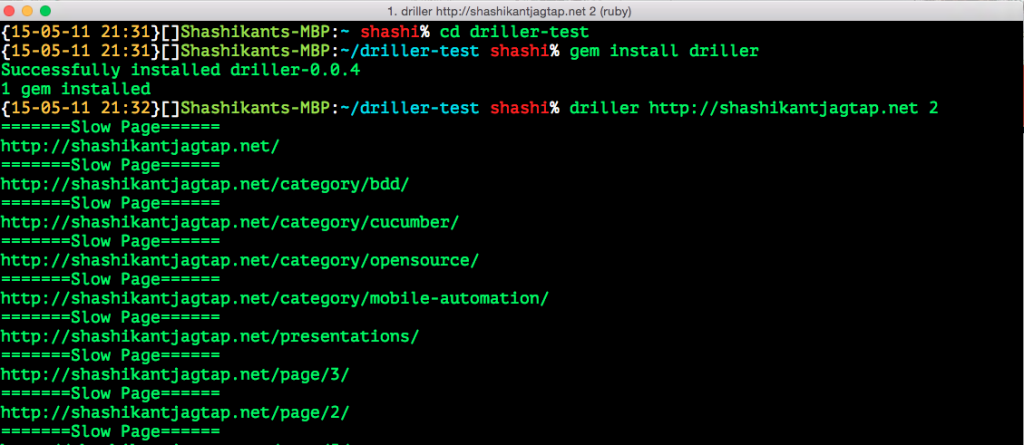

Or install it yourself as:

$ gem install driller

Driller takes two arguments:

- URL of the page to be crawled

- Depth of the crawl

$ driller http://www.example.com 2

If you installed it with Bundler, then run:

$ bundle exec driller http://www.example.com 2

This will crawl the website up to level 2. You can increase the depth as needed.
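To make the depth argument concrete, here is a minimal sketch of a depth-limited, breadth-first crawl over an in-memory link graph. It assumes depth is counted as the number of links followed from the start URL; the `crawl` method and the `LINKS` hash are hypothetical and stand in for real HTTP requests, so this is not how Driller or Anemone is implemented.

```ruby
require "set"

# Hypothetical link graph standing in for a real website.
LINKS = {
  "/"            => ["/about", "/blog"],
  "/about"       => ["/team"],
  "/blog"        => ["/blog/post-1"],
  "/team"        => ["/team/alice"],
  "/blog/post-1" => [],
  "/team/alice"  => []
}

# Visits pages breadth-first, following links no deeper than depth_limit.
def crawl(start, depth_limit, links)
  visited = Set.new([start])
  queue = [[start, 0]]

  until queue.empty?
    url, depth = queue.shift
    # Pages at the limit are visited, but their links are not followed.
    next if depth >= depth_limit

    links.fetch(url, []).each do |link|
      next if visited.include?(link)

      visited << link
      queue << [link, depth + 1]
    end
  end

  visited.to_a
end

puts crawl("/", 2, LINKS).inspect
```

With a depth of 2, `/team/alice` is never reached because it sits three links away from the start page.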

Report

This will create three HTML files: valid_urls.html lists URLs that returned 200, broken.html lists URLs that did not return 200, and slow_pages.html lists URLs with a response time greater than 5000. You can display these HTML files on a CI server.
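As a rough idea of what producing such a report involves, the sketch below builds a simple HTML page from a list of URLs. The `build_report` helper and the page layout are hypothetical; Driller's real report markup may look quite different.

```ruby
require "erb"

# Hypothetical helper: renders a list of URLs as a minimal HTML page.
def build_report(title, urls)
  safe_title = ERB::Util.html_escape(title)
  items = urls.map do |url|
    safe_url = ERB::Util.html_escape(url)
    "<li><a href=\"#{safe_url}\">#{safe_url}</a></li>"
  end

  <<~HTML
    <html>
    <head><title>#{safe_title}</title></head>
    <body>
    <h1>#{safe_title}</h1>
    <ul>
    #{items.join("\n")}
    </ul>
    </body>
    </html>
  HTML
end

# Made-up sample data.
broken = ["http://www.example.com/missing", "http://www.example.com/search?q=a&page=2"]
puts build_report("Broken URLs", broken)
```

Writing the result to disk is then a single call such as `File.write("broken.html", build_report("Broken URLs", broken))`, after which a CI job can archive or publish the file.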

On the command line, it looks like this:

Why Driller?

Driller is based on Anemone, but it has some benefits over other Ruby-based crawlers:

- Driller can be used from the command line.

- Driller reports slow pages in the terminal console.

- Driller creates reports as HTML files, which can be published by CI servers like Jenkins.

Source Code

You can find the source code on GitHub here:

https://github.com/Shashikant86/driller

I hope you will like Driller and use it to check your website for error pages and slow pages.