Superagentic AI is proud to announce RLM Code today. Recursive Language Models (RLMs) are a hot topic at the moment. If you are following the long-context debate, you have probably seen the split:

- one side says Recursive Language Models (RLMs) are a real shift in inference-time reasoning

- the other side says this is mostly a repackaging of coding-agent patterns from tools such as Codex and Cursor

Both sides raise valid points. What is usually missing is a reproducible way to test the claims yourself. RLM Code is built for exactly that. It is not a consumer assistant. It is not an enterprise orchestration suite. It is a research playground for running, comparing, and stress-testing RLM-style workflows.

Why RLMs matter

Recent discussion around RLMs has focused on one core claim: long-context reasoning should be handled through symbolic recursion in code, not by repeatedly stuffing more text into the context window.

A commonly described RLM pattern includes three constraints:

- Treat the long prompt or context as a symbolic object in the environment.

- Force the model to write code that recursively calls LMs for subproblems.

- Return subcall results into variables, not into ever-growing chat history.
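As a rough illustration of the three constraints above, here is a minimal Python sketch. It is not RLM Code's implementation and not taken from any paper: `fake_lm`, `recursive_answer`, and the `max_chars` and `max_depth` parameters are hypothetical names chosen for this example, and the "model" is a deterministic stand-in so the snippet runs without an API key. In a real RLM the model itself would write code of this shape; here it is written by hand to show the structure.

```python
from typing import Callable

LM = Callable[[str, str], str]


def fake_lm(query: str, text: str) -> str:
    """Hypothetical stand-in for an LM call: returns the lines that mention the query."""
    hits = [line for line in text.splitlines() if query.lower() in line.lower()]
    return "\n".join(hits)


def recursive_answer(
    query: str,
    context: str,
    lm: LM,
    max_chars: int = 200,
    depth: int = 0,
    max_depth: int = 8,
) -> str:
    """Answer `query` over `context` by recursing on halves of the context."""
    lines = context.splitlines()

    # Base case: the piece is small enough (or cannot be split further),
    # so a single LM call sees it directly.
    if len(context) <= max_chars or depth >= max_depth or len(lines) < 2:
        return lm(query, context)

    # The long context is an ordinary variable that code can slice,
    # not text appended to a chat transcript.
    mid = len(lines) // 2
    left = recursive_answer(query, "\n".join(lines[:mid]), lm, max_chars, depth + 1, max_depth)
    right = recursive_answer(query, "\n".join(lines[mid:]), lm, max_chars, depth + 1, max_depth)

    # Subcall results are held in variables; only these short values
    # are passed to the combining call.
    combined = "\n".join(part for part in (left, right) if part)
    return lm(query, combined) if combined else ""


if __name__ == "__main__":
    document = "\n".join(f"line {i}: filler text" for i in range(100))
    document = document.replace("line 42: filler text", "line 42: the secret code is 7")
    print(recursive_answer("secret", document, fake_lm))
```

Swapping `fake_lm` for a real client is the only change needed to try this on an actual model, although prompt wording, splitting strategy, and depth limits would all need tuning.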

Whether that difference is revolutionary or incremental is exactly what should be tested, not argued.

Who this is for

- ML researchers testing long-context strategies

- applied AI engineers evaluating recursive reasoning loops

- framework authors who want side-by-side comparisons across paradigms

- teams running controlled benchmark experiments before making product bets

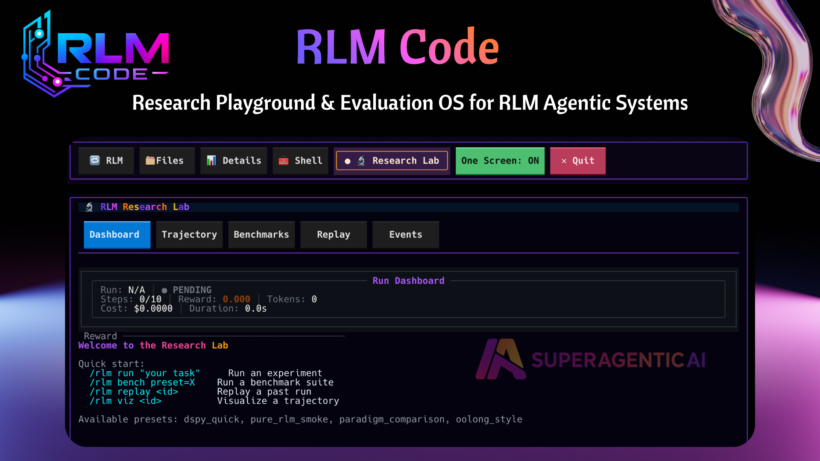

What RLM Code is

RLM Code is a research playground and evaluation OS for recursive language-model workflows.

It gives you:

- a terminal-first research interface

- paper-style pure RLM execution paths

- benchmark presets and custom benchmark packs

- trajectory replay and event-level introspection

- multi-sandbox execution with security controls

- framework adapters under one registry

- pluggable observability sinks

What is included in RLM Code

1. Research-first TUI

A dedicated Research Lab tab with:

- Dashboard

- Trajectory viewer

- Benchmarks

- Replay

- Live events

2. Benchmark system

Built-in presets (including paper-oriented styles), leaderboard metrics, comparison reports, and artifact export.

3. Sandbox runtime layer (Superbox)

Runtime selection and fallback across:

- Docker (recommended secure default)

- Apple Container (macOS)

- cloud options like Modal, E2B, Daytona

- Local (for development only)

Pure exec mode is opt-in and requires explicit acknowledgment.
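The selection-and-fallback idea described above can be pictured with a small Python sketch. This is an assumption about the general pattern, not Superbox's actual logic: `select_runtime`, the runtime names, and the `acknowledge_pure_exec` flag are all hypothetical, and the Docker probe only checks that a `docker` executable is on `PATH`.

```python
import shutil
from typing import Callable, Dict, List

Probe = Callable[[], bool]


def docker_cli_present() -> bool:
    # Only confirms a `docker` executable is on PATH; it does not check
    # that the daemon is running or reachable.
    return shutil.which("docker") is not None


def select_runtime(
    preference: List[str],
    probes: Dict[str, Probe],
    acknowledge_pure_exec: bool = False,
) -> str:
    """Return the first runtime in `preference` whose probe succeeds."""
    for name in preference:
        # Unsandboxed execution is skipped unless the caller opted in explicitly.
        if name == "pure_exec" and not acknowledge_pure_exec:
            continue
        probe = probes.get(name)
        if probe is not None and probe():
            return name
    raise RuntimeError(f"no usable runtime among: {', '.join(preference)}")


if __name__ == "__main__":
    probes = {"docker": docker_cli_present, "local": lambda: True}
    print(select_runtime(["docker", "local"], probes))
```

Keeping the opt-in check inside the selector means a misordered preference list cannot silently fall through to unsandboxed execution.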

4. Framework adapter registry

Adapter-ready execution for:

- DSPy RLM

- ADK-style RLM paths

- Pydantic AI

- Google ADK

- DeepAgents

5. Observability integrations

Pluggable sinks for:

- Local JSONL

- MLflow

- OpenTelemetry

- LangSmith

- LangFuse

- Logfire

You can run multiple sinks at once and verify them from the TUI.
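To make "multiple sinks at once" concrete, here is a minimal fan-out sketch in Python. The class names (`JsonlSink`, `MemorySink`, `FanOutSink`) and the `emit` method are hypothetical and do not reflect RLM Code's sink interface; the sketch only shows the general pattern of sending each event to every sink while keeping one failing sink from blocking the others.

```python
import json
from pathlib import Path
from typing import Any, Dict, Iterable, List, Protocol

Event = Dict[str, Any]


class Sink(Protocol):
    def emit(self, event: Event) -> None: ...


class JsonlSink:
    """Appends each event as one JSON object per line."""

    def __init__(self, path: str) -> None:
        self.path = Path(path)

    def emit(self, event: Event) -> None:
        with self.path.open("a", encoding="utf-8") as handle:
            handle.write(json.dumps(event, sort_keys=True) + "\n")


class MemorySink:
    """Keeps events in a list; handy for tests and quick inspection."""

    def __init__(self) -> None:
        self.events: List[Event] = []

    def emit(self, event: Event) -> None:
        self.events.append(event)


class FanOutSink:
    """Forwards every event to all sinks and reports which ones failed."""

    def __init__(self, sinks: Iterable[Sink]) -> None:
        self.sinks = list(sinks)

    def emit(self, event: Event) -> List[str]:
        failures: List[str] = []
        for sink in self.sinks:
            try:
                sink.emit(event)
            except Exception as exc:  # one bad sink should not stop the rest
                failures.append(f"{type(sink).__name__}: {exc}")
        return failures
```

A run loop would call `FanOutSink.emit` once per event and surface any returned failures, which is one way a UI could show per-sink health.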

Product demo

Watch the demo:

A practical way to evaluate RLM vs coding agents

- Fix the same benchmark/task set.

- Keep model family and budget constraints comparable.

- Bound every run by steps, timeout, and budget.

- Compare trajectories and final outcomes together.

- Track failure modes and tail behavior, not only averages.
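The last point, tracking tails rather than only averages, can be sketched in a few lines of Python. The `RunResult` record and its fields are hypothetical and are not RLM Code's export format; the percentile uses the simple nearest-rank definition.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RunResult:
    task_id: str
    success: bool
    tokens: int


def nearest_rank_percentile(values: List[int], pct: int) -> int:
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(runs: List[RunResult]) -> Dict[str, float]:
    """Report the average alongside tail and failure statistics."""
    if not runs:
        raise ValueError("no runs to summarize")
    tokens = [run.tokens for run in runs]
    return {
        "success_rate": sum(run.success for run in runs) / len(runs),
        "mean_tokens": statistics.mean(tokens),
        "p95_tokens": nearest_rank_percentile(tokens, 95),
        "max_tokens": max(tokens),
    }


def same_task_set(a: List[RunResult], b: List[RunResult]) -> bool:
    """A comparison is only fair if both systems ran exactly the same tasks."""
    return sorted(run.task_id for run in a) == sorted(run.task_id for run in b)
```

With nine runs at 100 tokens and one at 5000, the mean is 590 while the 95th percentile is 5000, which is the kind of gap an average alone would hide.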

Getting started

Install:

uv tool install "rlm-code[tui,llm-all]"

Launch:

rlm-code

In the TUI:

/connect

/sandbox profile secure

/rlm run "small scoped task" steps=4 timeout=30 budget=60

/rlm bench preset=token_efficiency

/rlm bench compare candidate=latest baseline=previous

/rlm observability

Security and cost guardrails

- Docker-backed isolation is the recommended default for secure local runs

- pure exec mode requires explicit acknowledgment

- profile-based sandbox settings make secure defaults easy to apply

- runtime doctor/status commands expose health and readiness before experiments

Recursive workflows can scale compute quickly. Always set bounds before broad experiments: steps, timeout, and budget.
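For readers who want to see what such bounds look like in code, here is a minimal Python sketch of a guard that a recursive loop could call before each step. It is an illustration only: `RunBounds`, `BoundExceeded`, and `charge` are hypothetical names, and the unit of `budget` is an assumption left abstract here (for example tokens or currency), since this post does not define it.

```python
import time
from dataclasses import dataclass, field


class BoundExceeded(RuntimeError):
    """Raised when a run goes past one of its configured limits."""


@dataclass
class RunBounds:
    max_steps: int
    timeout_s: float
    budget: float  # unit is an assumption: tokens, currency, or similar
    steps: int = 0
    spent: float = 0.0
    started: float = field(default_factory=time.monotonic)

    def charge(self, cost: float) -> None:
        """Record one step and its cost, then enforce all three limits."""
        self.steps += 1
        self.spent += cost
        if self.steps > self.max_steps:
            raise BoundExceeded(f"step limit of {self.max_steps} exceeded")
        if self.spent > self.budget:
            raise BoundExceeded(f"budget of {self.budget} exceeded")
        if time.monotonic() - self.started > self.timeout_s:
            raise BoundExceeded(f"timeout of {self.timeout_s}s exceeded")


if __name__ == "__main__":
    bounds = RunBounds(max_steps=4, timeout_s=30.0, budget=60.0)
    try:
        while True:
            bounds.charge(cost=10.0)  # stand-in for one recursive LM call
    except BoundExceeded as exc:
        print(f"stopped: {exc}")
```

Sharing a single `RunBounds` instance across all recursive subcalls is what keeps the total bounded; a fresh instance per subcall would let cost grow with recursion depth.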

What this release is trying to do

This release does not ask you to accept a claim on the strength of branding. It asks you to run experiments. If RLM patterns deliver real advantages for your workload, you should be able to prove it with traces and benchmarks. If they do not, you should be able to show that clearly too.

That is why RLM Code exists as a research playground.

Links

Docs: https://superagenticai.github.io/rlm-code/

PyPI: https://pypi.org/project/rlm-code/

GitHub: https://github.com/SuperagenticAI/rlm-code